Robust Deep Object Tracking against Adversarial Attacks

Shuai Jia¹, Chao Ma¹, Yibing Song², Xiaokang Yang¹, Ming-Hsuan Yang³

¹ MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

² Tencent AI Lab

³ University of California at Merced

Abstract

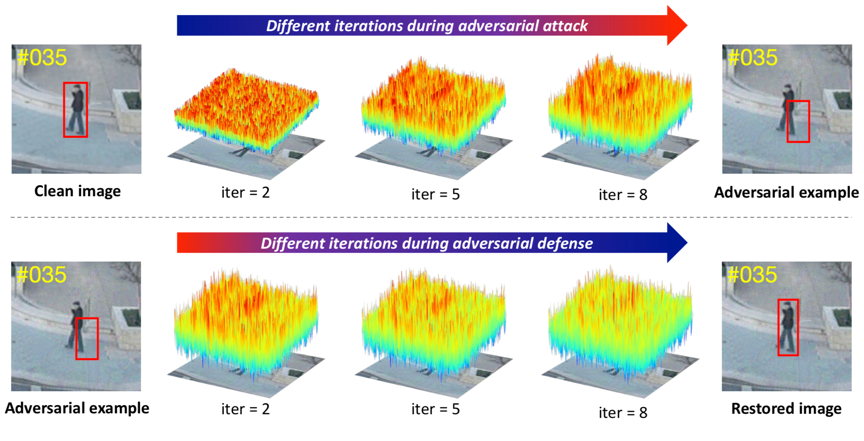

Addressing the vulnerability of deep neural networks (DNNs) has attracted significant attention in recent years. While recent studies on adversarial attack and defense mainly focus on single images, few efforts have been made to perform temporal attacks against video sequences. Because existing adversarial attack approaches designed for static images do not consider the temporal consistency between frames, they do not perform well against deep object trackers. In this work, we generate adversarial examples on top of video sequences to improve tracking robustness against adversarial attacks under both white-box and black-box settings. To this end, we take motion signals into account when generating lightweight perturbations over the estimated tracking results frame by frame. For the white-box attack, we generate temporal perturbations via known trackers to significantly degrade tracking performance. For the black-box attack, we transfer the generated perturbations to unknown target trackers, achieving transfer attacks. Furthermore, we train universal adversarial perturbations and add them directly to all video frames, improving attack effectiveness at minor computational cost. On the defense side, we sequentially learn to estimate and remove the perturbations from input sequences to restore tracking performance. We apply the proposed adversarial attack and defense approaches to state-of-the-art tracking algorithms. Extensive evaluations on large-scale benchmark datasets, including OTB, VOT, UAV123, and LaSOT, demonstrate that our attack method significantly degrades tracking performance and transfers favorably to other backbones and trackers. Notably, the proposed defense method partially restores the original tracking performance and yields additional performance gains when no adversarial attack is present.
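The abstract describes the attacks only at a high level. As a rough illustration, and not the authors' implementation, the sketch below shows a generic L∞-bounded sign-gradient (PGD-style) attack applied frame by frame. Each frame's perturbation is warm-started from the previous frame's, as one simple way to exploit temporal consistency. A second helper adds a single universal perturbation to every frame. The linear `toy_response` score, all function names, and the values of `eps`, `step`, and `iters` are hypothetical stand-ins. A real attack would back-propagate through an actual tracker's outputs instead of using an analytic gradient.

```python
import numpy as np


def project_linf(delta, eps):
    """Clip a perturbation into the L-infinity ball of radius eps."""
    return np.clip(delta, -eps, eps)


def toy_response(frame, w):
    """Hypothetical stand-in for a tracker's target-confidence score (a linear map)."""
    return float(np.sum(w * frame))


def attack_frame(frame, w, delta_init, eps=8.0, step=2.0, iters=5):
    """Run sign-gradient steps that lower the toy target score for one frame.

    For the linear toy score, d(score)/d(frame) = w, so lowering the score
    means stepping against sign(w). Frames are assumed to be float arrays
    with pixel values in [0, 255].
    """
    delta = delta_init.copy()
    for _ in range(iters):
        grad = w  # analytic gradient of toy_response w.r.t. the frame (assumption)
        delta = project_linf(delta - step * np.sign(grad), eps)
        # Keep the perturbed frame inside the valid pixel range [0, 255].
        delta = np.clip(frame + delta, 0.0, 255.0) - frame
    return delta


def attack_video(frames, w, eps=8.0):
    """Attack frames in order, warm-starting each frame from the previous perturbation."""
    delta = np.zeros_like(frames[0], dtype=float)
    adversarial = []
    for frame in frames:
        delta = attack_frame(frame, w, delta, eps=eps)
        adversarial.append(frame + delta)
    return adversarial, delta


def apply_universal(frames, u, eps=8.0):
    """Add one (hypothetical, pre-trained) universal perturbation u to every frame."""
    u = project_linf(u, eps)
    return [np.clip(frame + u, 0.0, 255.0) for frame in frames]
```

With a real tracker, `grad` would instead come from automatic differentiation of an attack loss on the tracker's outputs with respect to the input search region. Because the universal perturbation is computed once and only added per frame, `apply_universal` costs a single addition and clip per frame at attack time, which is consistent with the low overhead the abstract mentions.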

Demo

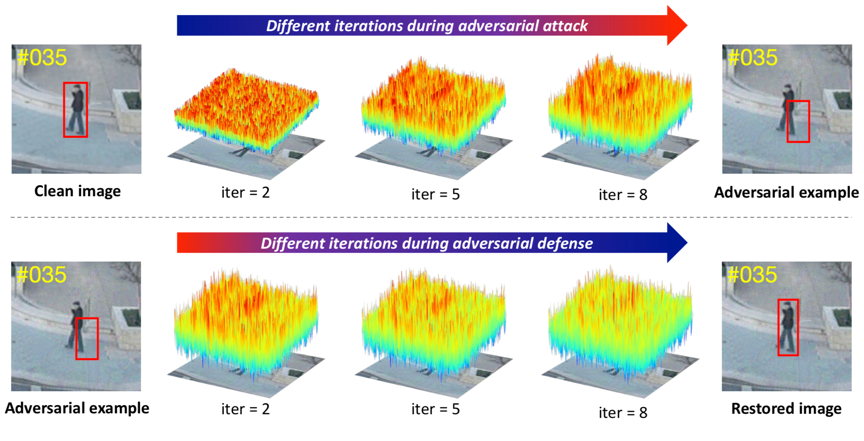

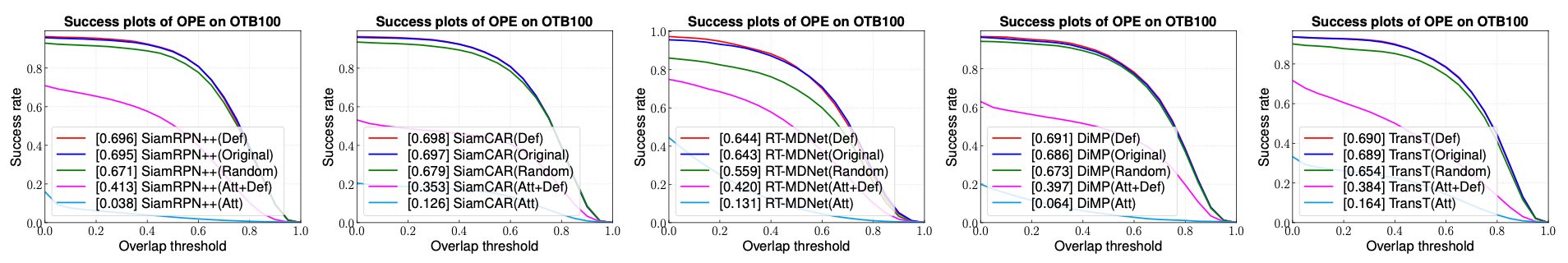

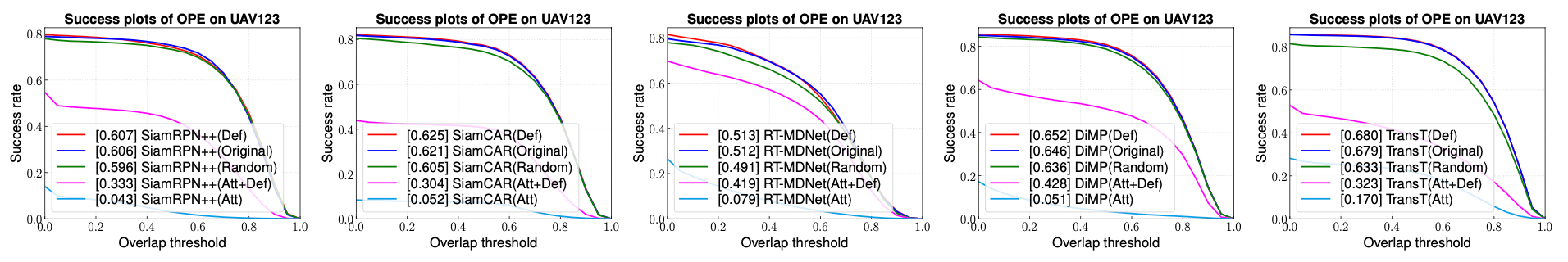

Experiments

|

Tracking performance of the adversarial attack and defense methods on the OTB100 dataset |

|

Tracking performance of the adversarial attack and defense methods on the UAV123 dataset |

|

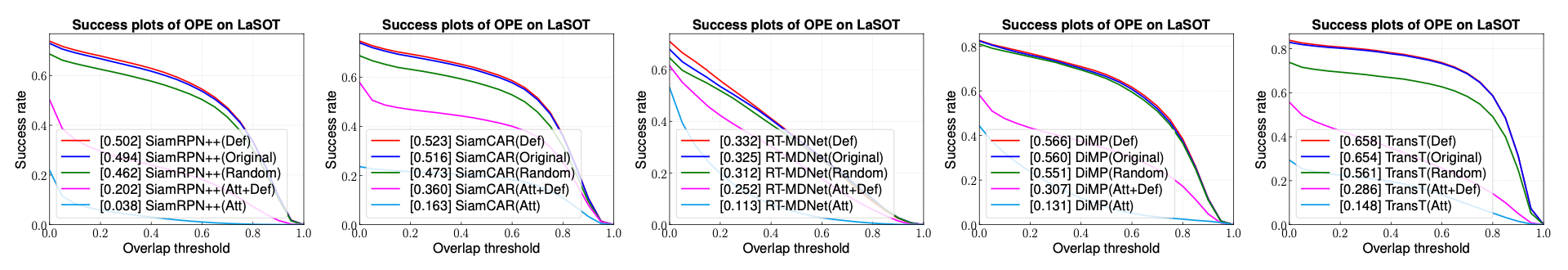

Tracking performance of the adversarial attack and defense methods on the LaSOT dataset |

Downloads

The source code is available at [Code].

All raw results are available at [Raw_results].

More demos are available at [Video].

References

B. Li, W. Wu, Q. Wang, F. Zhang, J. Xing, and J. Yan, "SiamRPN++: Evolution of Siamese visual tracking with very deep networks," in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

D. Guo, J. Wang, Y. Cui, Z. Wang, and S. Chen, "SiamCAR: Siamese fully convolutional classification and regression for visual tracking," in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

I. Jung, J. Son, M. Baek, and B. Han, "Real-time MDNet," in European Conference on Computer Vision (ECCV), 2018.

X. Chen, B. Yan, J. Zhu, D. Wang, X. Yang, and H. Lu, "Transformer tracking," in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

S. Jia, C. Ma, Y. Song, and X. Yang, "Robust tracking against adversarial attacks," in European Conference on Computer Vision (ECCV), 2020.