Towards Unified Defense for Face Forgery and Spoofing Attacks


Abstract

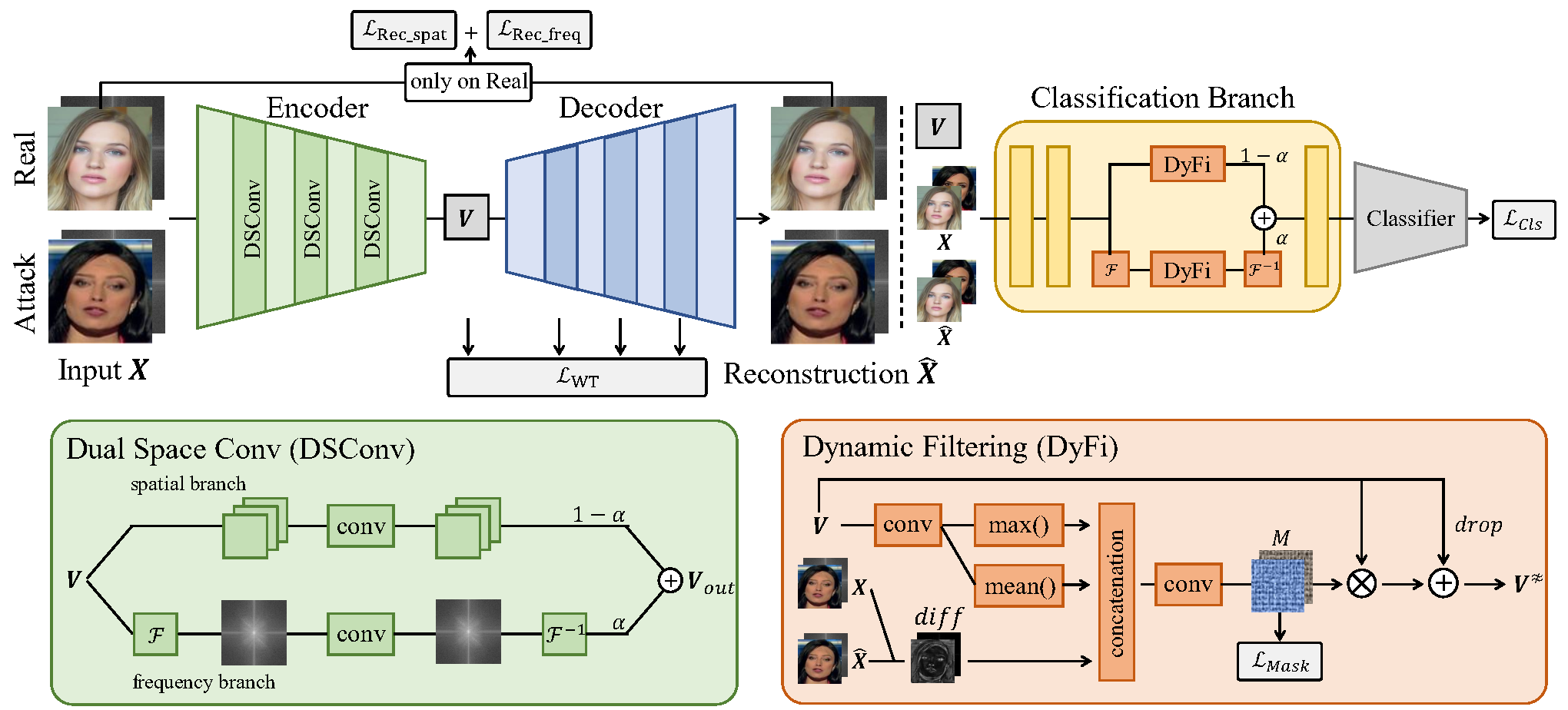

Real-world face recognition systems are vulnerable to diverse face attacks, ranging from digitally manipulated artifacts to physically crafted spoofing attacks. Existing works primarily use an image classification network to address one type of attack while disregarding the other. However, in real-world scenarios, face recognition systems often encounter diverse attacks simultaneously, which renders such single-attack detection solutions ineffective. Moreover, a detector that relies excessively on a classifier can easily fail when encountering face attacks with unknown patterns, because the category-level differences learned by classification backbones do not generalize well to new attacks. Considering that real data are captured from actual individuals, whereas attack samples are generated by various distinct techniques, we focus on extracting compact representations of real faces. This approach allows us to identify the fundamental differences between genuine and attack images, enabling us to address both manipulated artifacts and spoofing attacks simultaneously. Concretely, we propose a dual space reconstruction learning framework that models the commonalities of genuine faces in both the spatial and frequency domains. With the learned characteristics of real faces, the model is more likely to separate diverse attack samples from genuine images as outliers. In addition, we introduce a dynamic filtering module that filters out the redundant information introduced by the reconstruction and enhances the critical divergence between real and attack faces, yielding better classification features. Since the training samples cover only limited style variations, which hampers generalization to unseen domains, we further design a consistency regularized training strategy that mimics distribution shifts during training and imposes specific constraints to encourage style-irrelevant features.
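The abstract does not spell out the reconstruction objective. As a minimal sketch, assuming the reconstruction loss is applied only to genuine faces and combines an L1 term on pixels with an L1 term on log-amplitude FFT spectra (the choice of L1, the log-amplitude spectrum, and the equal weighting are all our assumptions, not the authors' formulation), a dual-space reconstruction loss could be written in NumPy as follows. The function names `log_amplitude` and `dual_space_reconstruction_loss` are hypothetical.

```python
import numpy as np


def log_amplitude(images: np.ndarray) -> np.ndarray:
    """Log-amplitude spectrum of each channel, via a 2-D FFT over the (H, W) axes."""
    spectrum = np.fft.fft2(images, axes=(-2, -1))
    return np.log1p(np.abs(spectrum))


def dual_space_reconstruction_loss(images: np.ndarray,
                                   recon: np.ndarray,
                                   labels: np.ndarray) -> float:
    """Spatial + frequency L1 reconstruction error, averaged over genuine faces only.

    images, recon: (N, C, H, W) float arrays.
    labels: (N,) array with 1 for genuine and 0 for attack (assumed convention).
    """
    real = labels == 1
    if not np.any(real):
        return 0.0  # no genuine faces in the batch, so there is nothing to reconstruct
    x, x_hat = images[real], recon[real]
    spatial = np.mean(np.abs(x - x_hat))                                   # pixel-space term
    frequency = np.mean(np.abs(log_amplitude(x) - log_amplitude(x_hat)))  # spectrum term
    return float(spatial + frequency)  # equal weighting of the two terms is an assumption
```

Because attack samples contribute nothing to this loss, the reconstructor only learns the statistics of real faces, so attacks would tend to leave larger residuals at test time. In an actual training loop the same computation would be expressed in an autodiff framework rather than NumPy.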
Furthermore, given the lack of accessible benchmarks for the unified evaluation of detection competence against both face forgery and spoofing attacks, we set up a new challenging benchmark, named UniAttack, to foster the exploration of effective solutions to face attack detection. Both qualitative and quantitative results on existing and proposed benchmarks demonstrate the superiority of our method over state-of-the-art approaches.

New Benchmark: UniAttack

In real-world scenarios, the integrity of deployed face recognition systems is threatened by both digitally manipulated face forgeries and physically crafted spoofing samples. Regrettably, current research predominantly focuses on approaches that target either face forgeries or spoofing samples alone, neglecting comprehensive defense against both kinds of malicious attack. This gap in unified detection strategies can be attributed, in part, to the absence of clearly defined benchmarks for evaluating model performance on both face forgery and spoofing attacks. To advance the development of effective countermeasures against a diverse range of facial attacks, we present a novel benchmark, named UniAttack, specifically designed for the unified detection of face forgery and spoofing attacks. This benchmark aims to foster the exploration of robust and generalizable solutions that enhance the security and reliability of face recognition systems in the presence of sophisticated malicious threats.


The proposed UniAttack benchmark contains three evaluation protocols. Protocol I is designed for intra-dataset assessment. Protocol II evaluates models in a cross-dataset scenario. Protocol III is for cross-type evaluation, in which models are tested on unseen attack methods.
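The three protocols can be illustrated with a small split helper. This is a sketch only: the `Sample` fields, the dataset and attack names, and the exact filtering rules are hypothetical, since UniAttack's actual composition and split files are not described on this page.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Sample:
    path: str
    dataset: str      # hypothetical source-dataset name
    attack_type: str  # "real" for genuine faces, otherwise an attack-method name
    split: str        # "train" or "test"


Split = Tuple[List[Sample], List[Sample]]


def intra_dataset(samples: Iterable[Sample], dataset: str) -> Split:
    """Protocol I (sketch): train and test on disjoint splits of one dataset."""
    pool = [s for s in samples if s.dataset == dataset]
    return ([s for s in pool if s.split == "train"],
            [s for s in pool if s.split == "test"])


def cross_dataset(samples: Iterable[Sample], train_sets: Set[str], test_set: str) -> Split:
    """Protocol II (sketch): train on some datasets, test on a held-out one."""
    if test_set in train_sets:
        raise ValueError("the test dataset must not be used for training")
    samples = list(samples)
    train = [s for s in samples if s.dataset in train_sets and s.split == "train"]
    test = [s for s in samples if s.dataset == test_set and s.split == "test"]
    return train, test


def cross_type(samples: Iterable[Sample], unseen_types: Set[str]) -> Split:
    """Protocol III (sketch): attack methods in `unseen_types` appear only at test time."""
    samples = list(samples)
    train = [s for s in samples
             if s.split == "train" and s.attack_type not in unseen_types]
    test = [s for s in samples
            if s.split == "test" and (s.attack_type == "real" or s.attack_type in unseen_types)]
    return train, test
```

The key property in each case is disjointness: Protocol II never trains on the target dataset, and Protocol III never exposes the held-out attack methods during training.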

Experiments


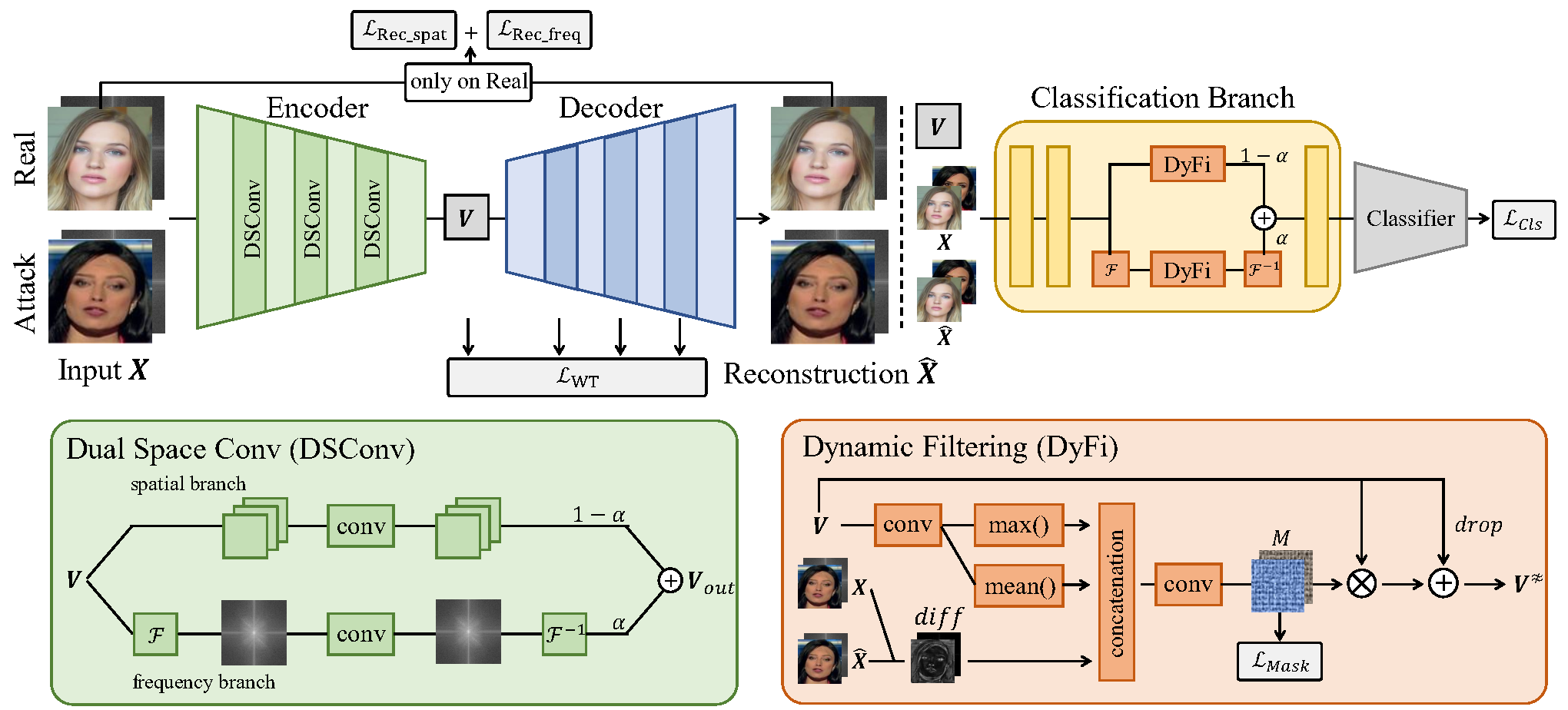

Intra-dataset testing comparisons on FaceForensics++. Our method performs favorably against current state-of-the-art approaches.


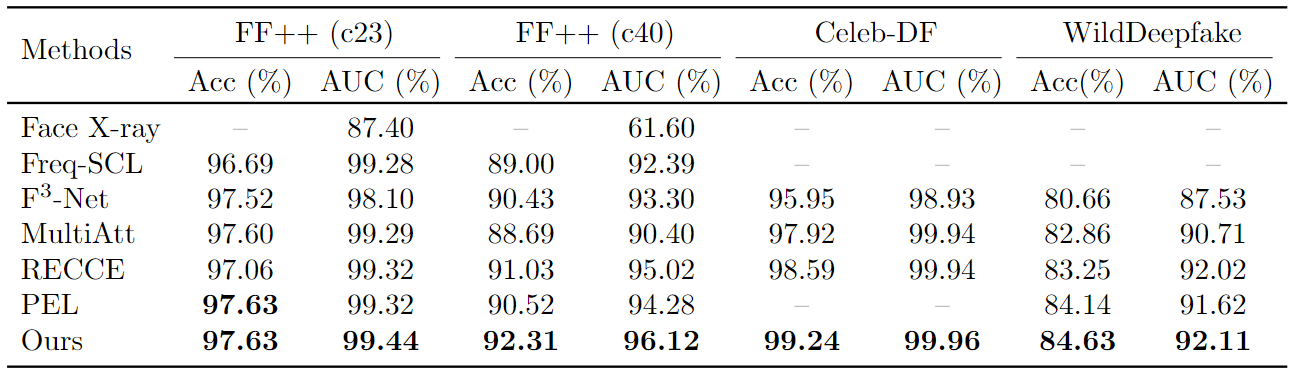

Cross-dataset testing on OULU-NPU, CASIA-MFSD, Replay-Attack, and MSU-MFSD.

Visual Results


Reconstruction visualization. (a): Intra-dataset testing on FaceForensics++; (b): cross-dataset testing under the I&C&M to O protocol. Real faces are reconstructed well with little blur, whereas attack faces cannot be restored faithfully, as evidenced by the large reconstruction differences in both the spatial and frequency domains. Best viewed in color and zoomed in.
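Difference maps of the kind described in the reconstruction figure could be produced roughly as follows. This sketch assumes a (C, H, W) input and its reconstruction, uses centered log-amplitude spectra for the frequency view, and min-max scales each map for display; the page does not state how the figure's maps were actually computed, and the helper names are hypothetical.

```python
import numpy as np


def _centered_log_amplitude(x: np.ndarray) -> np.ndarray:
    """Log-amplitude spectrum per channel with the zero frequency shifted to the center."""
    spectrum = np.fft.fft2(x, axes=(-2, -1))
    return np.log1p(np.abs(np.fft.fftshift(spectrum, axes=(-2, -1))))


def _to_unit_range(m: np.ndarray) -> np.ndarray:
    """Min-max scale a map to [0, 1] for display; a constant map becomes all zeros."""
    span = m.max() - m.min()
    return np.zeros_like(m) if span == 0 else (m - m.min()) / span


def difference_maps(image: np.ndarray, recon: np.ndarray):
    """Return (spatial_map, frequency_map), each (H, W), for a (C, H, W) image and its reconstruction."""
    spatial = np.abs(image - recon).mean(axis=0)  # channel-averaged pixel residual
    frequency = np.abs(_centered_log_amplitude(image)
                       - _centered_log_amplitude(recon)).mean(axis=0)  # spectral residual
    return _to_unit_range(spatial), _to_unit_range(frequency)
```

Note that per-image min-max scaling hides absolute magnitudes; to compare real and attack faces side by side, as the figure does, a shared scale across images would be more faithful.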


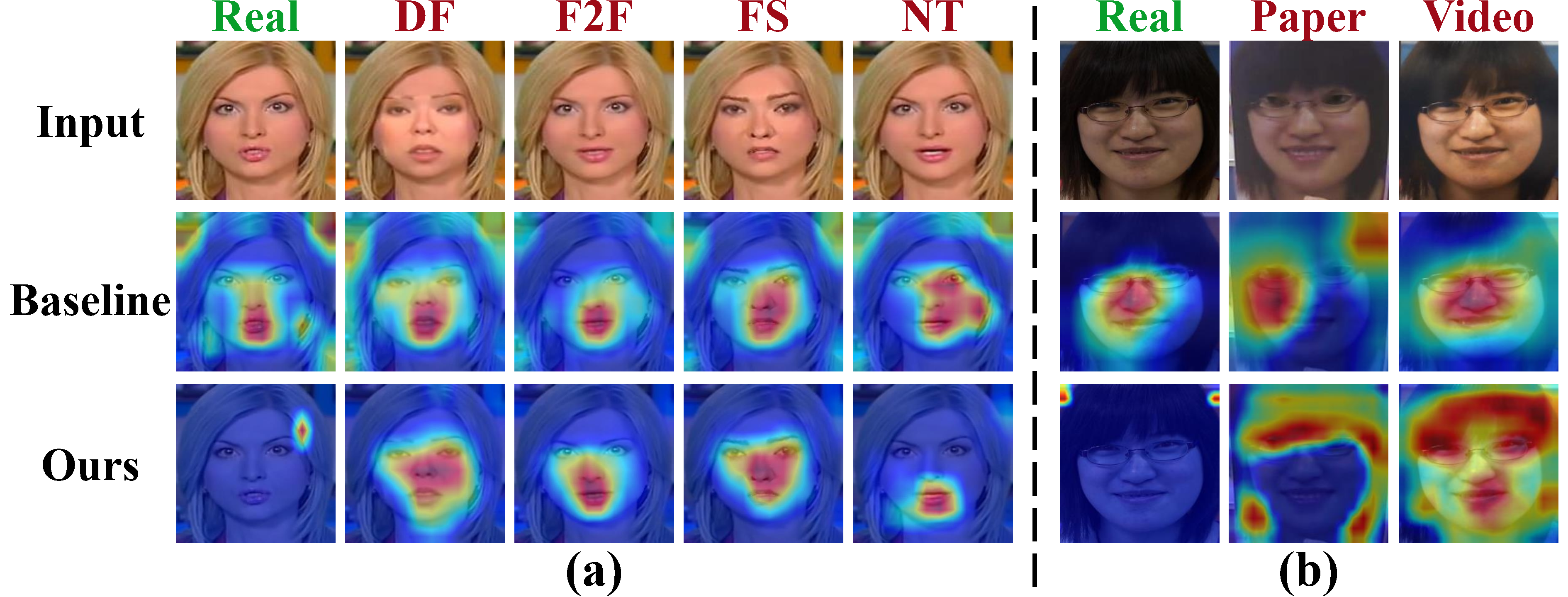

Grad-CAM visualization. (a): Intra-dataset testing on FaceForensics++; (b): cross-dataset testing under the O&M&I to C protocol. The first row shows the input images, and the second and third rows show the Grad-CAM heatmaps of the baseline method and our approach, respectively. Best viewed in color.
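Grad-CAM (Selvaraju et al., ICCV 2017) weights each channel of a chosen convolutional layer by the spatially averaged gradient of the target-class score, sums the weighted channels, and applies a ReLU. The following is a minimal NumPy sketch of that computation for a single image; which layer was used for the figure is not stated, and the activations and gradients are assumed to come from a framework hook.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one image from one convolutional layer.

    activations: (K, H, W) feature maps A^k of the chosen layer.
    gradients:   (K, H, W) gradients of the target-class score w.r.t. those maps.
    Returns an (H, W) map scaled so its maximum is 1 (all zeros if nothing is positive).
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

In practice the heatmap is then upsampled to the input resolution and overlaid on the face image, which is how heatmaps like those in the figure are usually displayed.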

Downloads

The source code for our conference version is available at [Code].

The source code for our journal version will be available.

Pre-processed UniAttack data will be available.

References

Lingzhi Li, Jianmin Bao, Ting Zhang, Hao Yang, Dong Chen, Fang Wen, and Baining Guo. Face X-ray for more general face forgery detection. In CVPR, 2020.

Jiaming Li, Hongtao Xie, Jiahong Li, Zhongyuan Wang, and Yongdong Zhang. Frequency-aware discriminative feature learning supervised by single-center loss for face forgery detection. In CVPR, 2021.

Yuyang Qian, Guojun Yin, Lu Sheng, Zixuan Chen, and Jing Shao. Thinking in frequency: Face forgery detection by mining frequency-aware clues. In ECCV, 2020.

Hanqing Zhao, Wenbo Zhou, Dongdong Chen, Tianyi Wei, Weiming Zhang, and Nenghai Yu. Multi-attentional deepfake detection. In CVPR, 2021.

Junyi Cao, Chao Ma, Taiping Yao, Shen Chen, Shouhong Ding, and Xiaokang Yang. End-to-end reconstruction-classification learning for face forgery detection. In CVPR, 2022.

Qiqi Gu, Shen Chen, Taiping Yao, Yang Chen, Shouhong Ding, and Ran Yi. Exploiting fine-grained face forgery clues via progressive enhancement learning. In AAAI, 2022.

Yunpei Jia, Jie Zhang, Shiguang Shan, and Xilin Chen. Single-side domain generalization for face anti-spoofing. In CVPR, 2020.

Zhuo Wang, Zezheng Wang, Zitong Yu, Weihong Deng, Jiahong Li, Tingting Gao, and Zhongyuan Wang. Domain generalization via shuffled style assembly for face anti-spoofing. In CVPR, 2022.

Chien-Yi Wang, Yu-Ding Lu, Shang-Ta Yang, and Shang-Hong Lai. PatchNet: A simple face anti-spoofing framework via fine-grained patch recognition. In CVPR, 2022.

Yunpei Jia, Jie Zhang, and Shiguang Shan. Dual-branch meta-learning network with distribution alignment for face anti-spoofing. TIFS, 2021.

Fangling Jiang, Qi Li, Pengcheng Liu, Xiang-Dong Zhou, and Zhenan Sun. Adversarial learning domain-invariant conditional features for robust face anti-spoofing. IJCV, 2023.